🔥 The SEOs Diners Club - Issue #173 - Weekly SEO Tips & News

By Mert Erkal, SEO Strategist & Conversion Expert with 15+ years of experience

The Game Has Changed (Again)

We're living through the most significant shift in search since Google first crawled the web, not because of another algorithm update or penalty threat, but because the very language of search is evolving from keyword matching to semantic understanding.

This week brought us tools that fundamentally change how we approach search engine optimisation (SEO). Screaming Frog v22 didn't just add features—it delivered semantic analysis capabilities that mirror how Google thinks. Meanwhile, Google's AI Mode is rolling out across the US, and we're watching the search landscape transform in real-time.

After 15 years in this industry, I can tell you: the SEOs who adapt to semantic search thinking will thrive. Those who cling to keyword density calculations and exact-match strategies will be left behind.

Let's dive into what matters this week.

Screaming Frog's Semantic Revolution: The Tool That Thinks Like Google

When Your Crawler Gets an AI Brain

Screaming Frog SEO Spider version 22.0, codenamed "Knee-Deep," represents a watershed moment for technical SEO. This isn't just another update—it's the first mainstream SEO tool to integrate Large Language Model embeddings directly into website analysis.

Think about this: Google has been using semantic understanding since Word2Vec in 2013 and the Hummingbird update. For over a decade, we've been analysing websites with keyword-counting tools while Google has been thinking in concepts, relationships, and meaning.

That gap just closed.

Understanding Vector Embeddings: The Map of Meaning

Vector embeddings sound technical, but the concept is elegantly simple. Imagine you have a massive map of meaning where every word, sentence, and document gets assigned coordinates based not on what letters it contains, but on what it means.

Pages about "automobiles" and "cars" would sit close together on this semantic map, even though the two words look nothing alike. Content about "dog training" and "puppy behaviour" would cluster together because they address related concepts. Meanwhile, a random page about "chocolate recipes" would sit far away from both.

This is exactly how vector embeddings work. They convert text into numerical coordinates in a high-dimensional space where semantic similarity translates to mathematical proximity. Google's been using this technology for years to understand search queries and match them with relevant content.

Now, Screaming Frog gives us the same capability.
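One common way to make "mathematical proximity" concrete is cosine similarity, the measure this issue mentions later for relevance scoring. As a sketch, for two embedding vectors a and b with n dimensions (the symbols are chosen here purely for illustration):

```latex
\[
\operatorname{sim}(\mathbf{a}, \mathbf{b})
  = \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert}
  = \frac{\sum_{i=1}^{n} a_i b_i}
         {\sqrt{\sum_{i=1}^{n} a_i^{2}} \, \sqrt{\sum_{i=1}^{n} b_i^{2}}}
\]
```

A score near 1 means the two vectors point in almost the same direction (similar meaning), while a score near 0 means they are largely unrelated. Whether a given tool uses exactly this measure internally is an assumption, not something confirmed here.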

Four Game-Changing Features

Duplicate and Semantically Similar Content Detection

This goes far beyond traditional duplicate content analysis. The tool can now identify pages that cover the same topic using completely different vocabulary. This is crucial for spotting content cannibalisation—where multiple pages compete for the same semantic space without you realising it.

When Screaming Frog shows similarity scores above 0.95, you're looking at pages that are dangerously close in meaning. These are consolidation opportunities waiting to strengthen your site's topical authority.
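To make the 0.95 threshold concrete, here is a minimal sketch of pairwise near-duplicate detection over page embeddings. It assumes you have exported the embeddings yourself. The URLs, the three-dimensional vectors, and the function names are invented for illustration and do not describe Screaming Frog's internals.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def find_similar_pairs(page_embeddings, threshold=0.95):
    """Return (url_a, url_b, score) for every pair scoring above the threshold."""
    pairs = []
    for (url_a, vec_a), (url_b, vec_b) in combinations(page_embeddings.items(), 2):
        score = cosine_similarity(vec_a, vec_b)
        if score > threshold:
            pairs.append((url_a, url_b, round(score, 3)))
    # Highest-scoring pairs first: these are the strongest consolidation candidates
    return sorted(pairs, key=lambda p: p[2], reverse=True)


# Hypothetical toy embeddings; real ones have hundreds or thousands of dimensions
pages = {
    "/dog-training": [0.80, 0.60, 0.00],
    "/puppy-behaviour": [0.78, 0.62, 0.05],
    "/chocolate-cake": [0.00, 0.10, 0.99],
}

similar = find_similar_pairs(pages)
for url_a, url_b, score in similar:
    print(f"{url_a} <-> {url_b}: {score}")
```

Comparing every pair grows quadratically with page count, so a large site would likely need an approximate nearest-neighbour index rather than this brute-force loop.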

Low Relevance (Off-Topic) Content Detection

The system calculates the semantic centre of your entire website by averaging all page embeddings, then measures how far each page sits from this centre. Pages scoring below 0.4 relevance are essentially orphaned from your site's main themes.

This feature is pure gold for large websites that have accumulated off-topic content over time. You can now systematically identify and address pages that dilute your site's focus.
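The centre-and-distance idea described above can be sketched in a few lines. This is an illustration under stated assumptions, not the tool's actual implementation: the URLs and vectors are made up, the function names are hypothetical, and cosine similarity is assumed as the distance measure.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def centroid(vectors):
    """Average the vectors dimension by dimension."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def low_relevance_pages(page_embeddings, threshold=0.4):
    """Return {url: score} for pages whose similarity to the site centre is below the threshold."""
    centre = centroid(list(page_embeddings.values()))
    scores = {
        url: cosine_similarity(vec, centre)
        for url, vec in page_embeddings.items()
    }
    return {url: score for url, score in scores.items() if score < threshold}


# Hypothetical site: five SEO pages and one stray recipe page
site = {
    "/seo-audit": [0.90, 0.10, 0.00],
    "/technical-seo": [0.80, 0.20, 0.00],
    "/link-building": [0.85, 0.15, 0.05],
    "/keyword-research": [0.90, 0.05, 0.05],
    "/site-speed": [0.80, 0.20, 0.10],
    "/chocolate-recipes": [0.00, 0.05, 0.95],
}

off_topic = low_relevance_pages(site)
print(off_topic)
```

Note that the off-topic page itself pulls the average slightly towards it, so on a site with a lot of stray content the "centre" may be less meaningful than on a tightly focused one.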

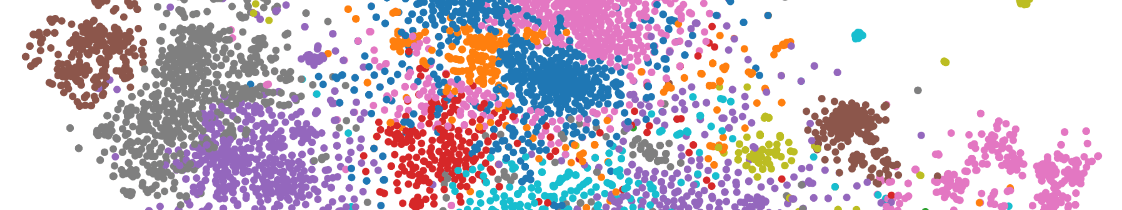

Content Cluster Visualisation

The Content Cluster Diagram provides a bird's-eye view of your site's semantic landscape. You'll see how related content naturally groups together, identify strong topical clusters, and spot gaps in your coverage.

Off-topic content appears as isolated dots on the diagram's edges. This visual analysis makes strategic decisions about content architecture much clearer.

Semantic Search

Perhaps the most exciting feature: you can enter any query and find pages that are semantically similar to that query, regardless of exact keyword matches. This transcends simple keyword searching to find content based on actual meaning and intent.

Setting Up Semantic Analysis: A Step-by-Step Guide

Getting these features running requires Screaming Frog SEO Spider v22 with a paid license. Here's your setup roadmap:

Choose an AI Provider

Connect to a reliable AI provider's API service—OpenAI, Gemini, or Ollama. Have your API key ready.

Add Embeddings Prompt from Library

Navigate to 'Prompt Configuration' and use 'Add from Library' to select the pre-configured embeddings setting. This prompt uses the optimised 'SEMANTIC_SIMILARITY' task type.

Connect to API

Ensure your API connection is active under 'Account Information.' This connection enables automatic embedding generation during crawls.

Enable HTML Storage

Go to 'Config > Spider > Extraction' and activate both 'Store HTML' and 'Store Rendered HTML.' This ensures the page text gets stored and becomes available for vector embedding generation.

Activate Embeddings Functionality

Navigate to 'Config > Content > Embeddings' and turn on 'Enable Embedding functionality.' Also, check 'Semantic Similarity' and 'Low Relevance' options to display relevant columns and filters in the 'Content' tab.

Crawl Your Website

Enter your target website URL and hit 'Start.' Wait for both crawl and API progress bars to reach 100%.

Run Crawl Analysis

For 'Semantically Similar' and 'Low Relevance Content' filters to work, initiate analysis after crawling completes. Do this manually via 'Crawl Analysis > Start' or enable 'Auto-Analyse at End of Crawl' under 'Crawl Analysis > Configure.'

Review Results

Examine 'Semantically Similar' and 'Low Relevance Content' filters in the 'Content' tab. Also, explore the 'Content Cluster Diagram' under 'Visualisations.'

Real-World Applications That Transform SEO

Content Audits and Strategy

Identify semantically similar pages competing for the same search intent. Consolidate these pages to strengthen topical authority. Find low-relevance content that dilutes your site's focus and either update, merge, or remove these pages. Use the Content Cluster Diagram to spot content gaps and plan strategic expansion.

Internal Linking Optimisation

The "Duplicate Details" tab and semantic similarity filters reveal logical internal linking opportunities between related content. This improves user experience, site navigation, and page authority distribution. Semantic search helps you quickly find all content related to specific topics for strategic internal link building.

Keyword Mapping and Relevance Calculations

Measure how semantically relevant your pages are to specific keywords, moving beyond simple keyword density metrics. Vectorise your keyword lists and compare them with page embeddings to determine which pages best match which queries. Calculate relevance scores using cosine similarity between page and keyword embeddings, scoring content relevance on a 0-100 scale.
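A minimal sketch of that keyword-to-page mapping might look like the following. It assumes keyword and page embeddings come from the same model and are already available as plain lists. The 0-100 scale is implemented here as cosine similarity multiplied by 100 with negative values clamped to zero, which is one reasonable reading rather than a documented formula. All names and vectors are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def relevance_score(page_vec, keyword_vec):
    """Map cosine similarity onto a 0-100 scale (assumed convention)."""
    return round(max(cosine_similarity(page_vec, keyword_vec), 0.0) * 100, 1)


def map_keywords_to_pages(keyword_embeddings, page_embeddings):
    """For each keyword, pick the page with the highest relevance score."""
    mapping = {}
    for keyword, keyword_vec in keyword_embeddings.items():
        best_url = max(
            page_embeddings,
            key=lambda url: cosine_similarity(page_embeddings[url], keyword_vec),
        )
        mapping[keyword] = (
            best_url,
            relevance_score(page_embeddings[best_url], keyword_vec),
        )
    return mapping


# Hypothetical toy embeddings
keywords = {
    "puppy training tips": [0.80, 0.60, 0.00],
    "easy dessert ideas": [0.00, 0.20, 0.98],
}
pages = {
    "/dog-training": [0.78, 0.62, 0.05],
    "/chocolate-cake": [0.00, 0.10, 0.99],
}

keyword_map = map_keywords_to_pages(keywords, pages)
for keyword, (url, score) in keyword_map.items():
    print(f"{keyword!r} -> {url} ({score}/100)")
```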

Redirect Mapping During Site Migrations

Use semantic analysis to map old URLs to their most semantically similar new URLs during site migrations. This approach minimises soft 404s that Google might detect when traditional exact-match redirects fall short.
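As a rough sketch of semantic redirect mapping, the snippet below pairs each old URL with its closest new URL and leaves weak matches unmapped for manual review. The URLs, vectors, function names, and the 0.8 cut-off are all assumptions made for illustration. Pick a cut-off by inspecting your own data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def map_redirects(old_pages, new_pages, min_similarity=0.8):
    """Map each old URL to its most similar new URL, or None if no match is strong enough."""
    redirects = {}
    for old_url, old_vec in old_pages.items():
        best_url, best_score = None, -1.0
        for new_url, new_vec in new_pages.items():
            score = cosine_similarity(old_vec, new_vec)
            if score > best_score:
                best_url, best_score = new_url, score
        # Weak matches are left as None so a human can decide what to do with them
        redirects[old_url] = best_url if best_score >= min_similarity else None
    return redirects


# Hypothetical embeddings for a small migration
old_site = {
    "/old/dog-training-guide": [0.80, 0.60, 0.00],
    "/old/company-picnic": [0.60, 0.00, 0.60],
}
new_site = {
    "/training/dogs": [0.78, 0.62, 0.05],
    "/recipes/chocolate": [0.00, 0.10, 0.99],
}

redirect_map = map_redirects(old_site, new_site)
print(redirect_map)
```

Leaving low-confidence matches unmapped is a deliberate choice: redirecting a page to a loosely related target is the kind of mismatch that can end up being treated as a soft 404.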

Competitor Analysis

Crawl competitor websites to analyse their content clusters and semantic relevance patterns. See how comprehensively competitors answer specific queries compared to your content. Use these insights to fine-tune your own content strategy.

Link Building Target Identification

Analyse potential link source pages to determine their semantic relevance to your target pages objectively. This analysis helps you avoid irrelevant links and build more valuable, contextually appropriate backlinks.

Maximising Results: Tips and Limitations

Optimise Content Area

Embedding quality directly correlates with content quality. Exclude repetitive template text like menus, footers, and cookie notices for more accurate embeddings. Use HTML tag, class, or ID inclusion/exclusion options under Config > Content > Area.

Boost Performance

Reducing vector embedding dimensions can significantly improve processing speed on lower-performance machines. This optimisation saves time when analysing large websites.

Handle Large Pages

Very long pages might exceed AI providers' token limits and disrupt analysis. Enable 'Limit Page Content' to restrict content to specific character limits when encountering these situations.

Adopt Hybrid Approaches

While semantic similarity excels at detecting exact and near-duplicate content, traditional text matching algorithms catch different types of similarities. Using both methods in parallel provides more comprehensive and reliable results.

Maintain Human Analysis

Despite this tool's power, results won't always be perfect or reliable in all scenarios. Always apply critical thinking and contextual analysis rather than blindly trusting data.

Ensure Model Consistency

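Avoid comparing embeddings from different sources. Vectors produced by different language models can have different dimensions, and even when the dimensions happen to match they live in different vector spaces, so similarity scores between them lead to incorrect conclusions.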

The Psychology of Large Language Models: Understanding Our New AI Colleagues

Behind the Digital Curtain

When you first encountered ChatGPT, how did it make you feel? Amazed, surprised, maybe even a little intimidated? These "digital wizards" demonstrate extraordinary capabilities, but what's happening behind the curtain?

Understanding Large Language Models (LLMs) isn't just about curiosity. To use these powerful tools effectively and extract maximum value, we need to understand their internal workings, operating principles, and limitations.

Stage 1: Pre-training - Compressing the Internet

An LLM's origin story begins with a massive data collection operation. This process resembles trying to fit the entire internet onto a hard drive.

Digital Treasure Hunting

The raw material for LLM training is the internet itself. Organisations like Common Crawl systematically scan 2.7 billion web pages as of 2024, collecting this vast ocean of information. However, this raw data can't be used directly.

Imagine having a library containing both Shakespeare's works and spam emails, both scientific papers and malware sites. This is where aggressive filtering and deduplication processes kick in. Spam sites, malware-hosting platforms, racist content, and adult materials get carefully filtered out. HTML and CSS markup gets stripped away, content is filtered by language (FineWeb keeps pages that a language classifier scores at 0.65 or higher for English), and personal information gets masked.

The result? Despite the internet's massive size, a quality dataset like FineWeb reaches a manageable 44 terabytes. This represents a collection of the highest quality and most diverse documents distilled from billions of web pages.

Machine Language: Tokenisation

Neural networks can't directly understand the language we speak. Words and sentences are mysteries to them. This is why texts need conversion into small particles called tokens.

This process, starting with 0s and 1s, became much more sophisticated with advanced algorithms like Byte Pair Encoding (BPE). GPT-4's vocabulary contains 100,277 different tokens. The FineWeb dataset transforms into a massive 15 trillion token puzzle after this conversion.
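The core idea behind Byte Pair Encoding can be shown with a toy implementation: start from raw bytes, then repeatedly merge the most frequent adjacent pair into a new token ID. This is a simplified sketch for intuition only. Production tokenisers use large pre-trained merge tables and extra pre-splitting rules, and the function names here are hypothetical.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return the most common adjacent pair of tokens, or None if there are no pairs."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(tokens, pair, new_token):
    """Replace every non-overlapping occurrence of `pair` with `new_token`."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(new_token)
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def bpe(text, num_merges):
    """Run `num_merges` BPE merge steps over the UTF-8 bytes of `text`."""
    tokens = list(text.encode("utf-8"))  # start from raw bytes (IDs 0-255)
    merges = {}
    for step in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:
            break
        new_id = 256 + step  # new token IDs continue after the byte range
        tokens = merge_pair(tokens, pair, new_id)
        merges[pair] = new_id
    return tokens, merges


text = "aaabdaaabac"
tokens, merges = bpe(text, num_merges=3)
print(len(text.encode("utf-8")), "bytes ->", len(tokens), "tokens")
print("learned merges:", merges)
```

Each merge shortens the sequence and grows the vocabulary by one, which is the trade-off a vocabulary of roughly 100,000 tokens represents at scale.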

Statistical Learning Magic

When token sequences are ready, the real magic begins. The neural network starts learning hidden connections, patterns, and relationships among these billions of tokens. The goal is simple but complex: "What token is most likely to come after this token?"

The model examines data in "windows" that can vary up to 8,000 tokens. Each time it tries to predict the next token, it makes mistakes, corrects itself, and tries again. With GPUs' parallel processing power, this process repeats billions of times.

The result of this intensive training is a base model. This model works like a lossy compression of the internet, capable of mimicking any document style—a massive token simulator. However, it's not yet an assistant that can converse with you—just a system that has memorised internet document patterns.
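The "what token comes next?" objective can be illustrated with the simplest possible model: a table of counts over adjacent word pairs. This is only a sketch for intuition. Real LLMs learn these statistics with neural networks over context windows of thousands of tokens rather than a lookup table, and the corpus and function names below are invented.

```python
from collections import Counter, defaultdict


def train_bigram_model(tokens):
    """Count, for every token, which tokens follow it and how often."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_probabilities(model, token):
    """Turn the follower counts for `token` into a probability distribution."""
    followers = model.get(token)
    if not followers:
        return {}
    total = sum(followers.values())
    return {candidate: count / total for candidate, count in followers.items()}


# Tiny hypothetical corpus, with whole words standing in for tokens
corpus = "the cat sat on the mat the cat ran".split()
model = train_bigram_model(corpus)

probs = next_token_probabilities(model, "the")
prediction = max(probs, key=probs.get)
print(probs)
print("most likely token after 'the':", prediction)
```

Sampling from these probabilities one token at a time, and feeding each choice back in, is the sense in which a base model acts as a "token simulator".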

Stage 2: Post-training - Birth of Assistant Models

While the base model is an excellent "internet mimicking machine," it's not useful as an assistant that provides logical answers to your questions. The second stage begins here.

Supervised Fine-tuning (SFT): Gaining Personality

At this stage, the model trains with specially prepared human-assistant conversations instead of internet documents. Following companies' values like "helpful, honest, and harmless," human labellers create thousands of example conversations.

Modern approaches also use existing LLMs to help generate these conversations. The SFT process is much shorter than pre-training (roughly 3 hours rather than months) but is dramatically effective. The model acquires an assistant personality and adopts human labellers' behavioural patterns.

Interesting fact: When you talk with ChatGPT, you're interacting with a statistical simulation of data labellers working at OpenAI.

Reinforcement Learning (RL): Learning to Think

RL is the revolutionary stage that transforms the model from mere imitation into a real problem solver. Like a student solving practice problems in school, the model generates multiple solutions for a problem, with correct approaches being reinforced while wrong ones get weakened.

During this process, the model develops "chains of thought" that human labellers could never teach. This phenomenon observed in models like DeepSeek R1 is truly fascinating: The model examines a problem from different angles, checks results, formulates equations, as if it's thinking.

Just as AlphaGo went on to beat the world's best human Go players, RL's potential in LLMs appears limitless.

Reinforcement Learning from Human Feedback (RLHF): Learning Human Taste

Some areas don't have clear right-wrong distinctions. In topics like creative writing, humour, and summarisation, "quality" is a subjective concept. RLHF enters here.

The system trains a separate "reward model" to simulate human preferences. Human evaluators rank the model's different outputs (like jokes), and the reward model learns these preferences. This allows the model to produce content aligned with human preferences without constantly needing human approval.

However, there's a trap: Since the reward model is also a neural network, it can be "fooled." The model might generate "adversarial examples" that are meaningless to humans but appealing to the reward model. Therefore, RLHF gets used carefully and for limited durations.

The "Psychology" and Limitations of LLMs

While LLMs demonstrate superhuman capabilities, confusing them with human minds would be a major mistake. These digital entities have their own unique "psychology."

Hallucinations: Confident-Looking Lies

One of LLMs' biggest dilemmas is their ability to generate completely fabricated information with great confidence, even on topics they don't know. By mimicking the confident style in training data, they can make false claims.

Two main strategies address this problem. First, "I don't know" responses get added to training sets so that models can honestly say "I don't know" when they truly don't. Second, tool usage lets models refresh their memory through external tools like web search: special tokens like search_start trigger a search, and the fresh information is placed in working memory (the context window) for response generation.

"They Need Tokens to Think": Mental Capacity Limits

The computation that the model can perform for each token generation is limited. Therefore, they need to spread their reasoning across multiple tokens to solve complex problems.

Solving math problems step-by-step allows the model to make small calculations at each stage and keep previous results in memory. This limitation is also why models struggle with direct counting. Character-by-character spelling tasks are hard for a related reason: the model sees tokens, not individual letters.

Their Own Identities: Identity Illusion

LLMs aren't real people and lack persistent self-awareness. They get "booted" from scratch in each conversation. Identity statements like "I'm ChatGPT, developed by OpenAI" come either from patterns in internet training data, which makes them a kind of hallucination, or from system messages supplied by the developer.

The "Swiss Cheese" Model: Random Gaps

LLMs' capabilities resemble Swiss cheese: while demonstrating excellent performance in most areas, they can make simple errors at unexpected and random points. They might solve Olympiad-level math problems, then make mistakes on simple comparisons like "which is bigger, 9.11 or 9.9?"

This is a reminder that they're statistical systems by nature.

Google AI Mode: The Search Revolution Goes Mainstream

When AI Becomes Default

Google took its AI-focused search vision a step further. "AI Mode," announced three weeks ago at Google I/O, is now being rolled out to all users in the US. Moreover, this transition doesn't require users to manually enable this mode—the search experience is transforming automatically.

The implications for SEO professionals are profound. When AI-powered search becomes the default experience, our entire approach to optimisation must evolve.

Key Highlights

AI Mode appears as a new tab on Google's search page. This mode is particularly activated for queries requiring comparison, analysis, and exploration. It includes multimodal AI integration supporting text, voice, and visual search. The "query fan-out" technique simultaneously scans multiple topics to provide comprehensive answers. Searches conducted through this mode will be categorised the same as classic web searches in Search Console.

Strategic Adaptations for SEO

Optimise your content for the complex queries AI Mode handles, since it now provides user-personalised, in-depth answers. When creating content, abandon classic "keyword stuffing" methods and instead create comprehensive and contextual content. Invest in multimodal SEO by aligning your visual, audio, and written content. When reviewing performance reports, consider AI Mode's effects: increased impressions or decreased clicks might reflect this transition.

The shift represents more than interface changes. Google is fundamentally changing how search results get generated and presented. Content that serves AI understanding will increasingly outperform content optimised solely for traditional ranking factors.

Google Removes 7 Rich Results: Simplifying the SERP

The Great Simplification

Google announced on June 12, 2025, that it will no longer support "Book Actions," "Course Info," "Claim Review," "Estimated Salary," "Learning Video," "Special Announcement," and "Vehicle Listing" structured data types. This decision aims to simplify the search results page. According to Google, these data types are rarely used and don't provide extra value for users.

What This Means for SEOs

Seven rich result types are being removed and will no longer appear as rich cards in Google SERPs. Google's reasoning is clear: they want a clean, focused, and user-friendly search experience. Changes will be implemented gradually over several weeks or months. Other structured data remains protected—Google still supports approximately 30 rich result types.

Action Items

Don't rush to delete old markup. Removing the eliminated schemas isn't necessary, since other search engines (like Bing and Yandex) might still use this data. Update your schema strategy by prioritising actively supported data types to optimise visibility. Monitor Search Console, since rich results reports might show declines. If you do lose rich cards, strengthen the titles and meta descriptions that make your content clickable.

This simplification reflects Google's broader strategy of reducing SERP complexity while maintaining the most valuable enhancements for users.
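For the schema review suggested in the action items above, a small script can list which pages still carry markup you want to watch. The sketch below walks a JSON-LD document and reports any `@type` values found in a caller-supplied watch list. The watch list shown is an illustrative subset only: mapping each retired rich result to its exact schema.org types is an assumption you should verify against Google's documentation, and the function names and sample markup are hypothetical.

```python
import json


def collect_types(node, found=None):
    """Recursively gather every @type value in a parsed JSON-LD structure."""
    if found is None:
        found = set()
    if isinstance(node, dict):
        type_value = node.get("@type")
        if isinstance(type_value, str):
            found.add(type_value)
        elif isinstance(type_value, list):
            found.update(t for t in type_value if isinstance(t, str))
        for value in node.values():
            collect_types(value, found)
    elif isinstance(node, list):
        for item in node:
            collect_types(item, found)
    return found


def flag_types(json_ld_text, watch_list):
    """Return the sorted @type values in the markup that appear in the watch list."""
    data = json.loads(json_ld_text)
    return sorted(collect_types(data) & set(watch_list))


# Illustrative subset; confirm the full type mapping before relying on it
WATCH_LIST = {"ClaimReview", "SpecialAnnouncement"}

sample_markup = """
{
  "@context": "https://schema.org",
  "@graph": [
    {"@type": "Article", "headline": "Example headline"},
    {"@type": "ClaimReview", "claimReviewed": "Example claim"}
  ]
}
"""

flagged = flag_types(sample_markup, WATCH_LIST)
print(flagged)
```

The script only reports; it does not remove anything, which fits the advice that the old markup can stay in place.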

AI World This Week: Tools, Services, and Insights

The Rapid Evolution Continues

Google is testing a new feature in search results: "Audio Overview." This feature provides AI-powered short audio summaries, giving users the opportunity to learn about topics by listening. Tests are currently limited to mobile users in the US and restricted to English searches. This innovation could increase accessibility for users with different content consumption preferences.

OpenAI introduced o3-pro, a new member of its advanced AI model series. o3-pro has more advanced reasoning capabilities, higher accuracy rates, and greater information processing capacity compared to the previous o3 model. It's designed to provide more reliable results, particularly in complex tasks like software development, data analysis, and logical problem-solving. This new model represents a significant step, especially for enterprise users and professional AI applications.

BrightEdge's "The Open Frontier of Mobile AI Search" report reveals that AI-powered searches are effective on desktop but remain limited on mobile. More than 90% of referral traffic from AI search engines still comes from desktop devices. This situation shows that despite mobile traffic constituting the majority, the AI search impact remains weak on mobile. While Google stands out as the only platform in mobile AI referrals, the difference is still low. Potential changes in Apple's Safari browser could fundamentally affect the mobile AI search market.

The Mobile AI Gap

This mobile limitation presents both a challenge and an opportunity. As AI search capabilities expand to mobile devices, early adopters who optimise for mobile AI experiences will gain significant advantages.

The Semantic Future: Where We Go From Here

After 15 years in SEO, I've witnessed numerous shifts—from PageRank to Panda, from keyword stuffing to user intent. But this semantic revolution feels different. It's not just another algorithm update; it's a fundamental change in how search engines understand and process information.

The tools we've discussed this week—Screaming Frog's semantic analysis, Google's AI Mode, and the evolution of LLMs—represent the infrastructure of future search. The question isn't whether semantic search will dominate; it's how quickly we adapt our strategies.

The New SEO Mindset

Traditional SEO asked: "What keywords does this page target?"

Semantic SEO asks: "What concepts does this content explore, and how do they relate to user intent?"

Traditional SEO optimised for specific queries.

Semantic SEO optimises for topic clusters and conceptual relationships.

Traditional SEO measured keyword density.

Semantic SEO measures semantic relevance and topical authority.

The winners in this new landscape will be those who build genuine topical authority, not through keyword manipulation, but through comprehensive, interconnected content that demonstrates a deep understanding of subject matter.

This means:

Creating content clusters that explore topics from multiple angles rather than targeting isolated keywords. Building internal linking structures based on semantic relationships rather than arbitrary anchor text strategies. Developing expertise that search engines can recognise through consistent, high-quality content across related concepts.

The Competitive Advantage

While most SEOs still think in keywords, those who master semantic analysis will gain significant competitive advantages. They'll identify content opportunities others miss, spot cannibalisation others can't detect, and optimise for search intent others don't understand.

The future belongs to SEOs who think like search engines—semantically, conceptually, and with a genuine understanding of user intent.

Until next week, keep optimising!

Best,

Mert


© 2022-2025 SEOs Diners Club by Mert Erkal